Okay, I managed to find some time and wrote a very basic second tutorial that introduces the main concepts behind primitive rendering in DX10. This tutorial builds on the base code from tutorial 1, so check that out if you haven't already.

Also, I need to mention that I'm not writing these tutorials for complete beginners: I expect you to have at least a very basic understanding of graphics programming and some of the terminology involved. I'm not going to go into a lot of detail regarding terms like culling, rasterizing, fragments, etc.

One last aside before the tutorial: what makes DX10 different from DX9 and OpenGL is the removal of the fixed-function pipeline. Now what the hell does that mean? Well, in DirectX 9 and OpenGL there were default ways of handling vertices, colors, texture coordinates, etc. You'd pass through a vertex and a color and the API would know what to do with them. It also handled lighting and other effects for you. In DX10 these defaults were removed and the core API was simplified and reduced. This gives you full control over each pipeline stage and removes past limitations such as a fixed maximum number of light sources, but it has a small downside: the code complexity has increased a little.

Take basic lighting as an example: in the past a hobbyist could enable lighting with a few simple function calls, get a satisfactory result and call it a day. Now, for the same effect, the hobbyist has to write the necessary vertex and pixel shaders and use the Phong (or another) reflection model to calculate the effect of lighting on the scene manually.

Basic Rendering and first steps into HLSL

Okay, enough of that, let’s dig in. To render something there are several steps we need to take:

- Generate (create) the vertices

- Send the vertices down to the pipeline

- Apply any transformations needed to the vertices (vertex shader)

- Set the color of each pixel (pixel shader)

Seems pretty simple? If only. The first addition to the code is loading an HLSL (High Level Shading Language) effect file; this file defines the vertex and pixel shaders we will be using. The contents of the effect file are:

```hlsl
matrix World;
matrix View;
matrix Projection;

struct PS_INPUT
{
    float4 Pos : SV_POSITION;
    float4 Color : COLOR0;
};

PS_INPUT VS( float4 Pos : POSITION, float4 Color : COLOR )
{
    PS_INPUT psInput;

    Pos = mul( Pos, World );
    Pos = mul( Pos, View );
    psInput.Pos = mul( Pos, Projection );
    psInput.Color = Color;

    return psInput;
}

float4 PS( PS_INPUT psInput ) : SV_Target
{
    return psInput.Color;
}

technique10 Render
{
    pass P0
    {
        SetVertexShader( CompileShader( vs_4_0, VS() ) );
        SetGeometryShader( NULL );
        SetPixelShader( CompileShader( ps_4_0, PS() ) );
    }
}
```

It's pretty simple: the vertex shader transforms each vertex by multiplying it by the world, view and projection matrices (taking it from model space to world space to view space to projected space). We've declared these matrix variables as globals in the FX file. The transformed vertex and its color are then passed down the pipeline, and the pixel shader simply returns the (interpolated) vertex color, which ends up in our frame. We also define a Render technique, which specifies which vertex and pixel shader programs to run. An effect file can contain multiple vertex/pixel shader functions and techniques, and each technique can also have multiple passes.

NOTE: this is a very basic explanation of the pipeline and a great deal of detail is missing; I've left it out on purpose so as not to confuse you. For example, I've left out that between the vertex and pixel shaders, primitives are assembled from the vertices and then rasterized into a list of pixels (technically fragments) covered by each primitive, and it's those fragments that the pixel shader runs on. At a later stage I will write a tutorial on how the modern graphics pipeline works.
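To make the row-vector convention concrete, here's a CPU-side sketch of what the vertex shader's three mul() calls compute. The Vec4/Mat4 types and the helper names are hypothetical stand-ins for HLSL's float4 and matrix; it assumes row vectors and row-major storage, which is the D3DX convention (translation sits in the bottom row).

```cpp
#include <array>
#include <cassert>

// Hypothetical stand-ins for HLSL float4 and matrix.
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Mirrors HLSL mul(v, M) with v as a row vector: out[c] = sum over r of v[r] * M[r][c].
Vec4 mul(const Vec4& v, const Mat4& m) {
    Vec4 out{0.0f, 0.0f, 0.0f, 0.0f};
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r)
            out[c] += v[r] * m[r][c];
    return out;
}

Mat4 identity() {
    Mat4 m{};  // all zeros
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

// With row vectors the translation lives in the bottom row, as in D3DX.
Mat4 translation(float x, float y, float z) {
    Mat4 m = identity();
    m[3][0] = x;
    m[3][1] = y;
    m[3][2] = z;
    return m;
}

// Same order as the vertex shader: model -> world -> view -> projection.
Vec4 transformVertex(const Vec4& pos, const Mat4& world,
                     const Mat4& view, const Mat4& projection) {
    Vec4 p = mul(pos, world);
    p = mul(p, view);
    return mul(p, projection);
}
```

Order matters here: with row vectors the matrix applied first is the leftmost one, which is why the shader multiplies by World, then View, then Projection.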

Loading the HLSL Effect File

Now to load the effect file we do the following:

```cpp
// load and compile the effect file
if ( FAILED( D3DX10CreateEffectFromFile( "basicEffect.fx", NULL, NULL, "fx_4_0",
                                         D3D10_SHADER_ENABLE_STRICTNESS, 0, pD3DDevice,
                                         NULL, NULL, &pBasicEffect, NULL, NULL ) ) )
    return fatalError("Could not load effect file!");

pBasicTechnique = pBasicEffect->GetTechniqueByName("Render");

// create matrix effect pointers
pViewMatrixEffectVariable = pBasicEffect->GetVariableByName( "View" )->AsMatrix();
pProjectionMatrixEffectVariable = pBasicEffect->GetVariableByName( "Projection" )->AsMatrix();
pWorldMatrixEffectVariable = pBasicEffect->GetVariableByName( "World" )->AsMatrix();
```

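The code above loads the effect file and creates an effect from it. The file is compiled on load, so any syntax errors in it will cause this step to fail, which is why catching the error here is extremely important! (If you want to see what went wrong, the eleventh parameter, ppErrors, can receive an ID3D10Blob containing the compiler's error messages.)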

Then we get a pointer to the "Render" technique defined in the file. This is the technique we will use to render our primitives.

The last step is to get pointers to the matrix variables we declared in the effect file so we can update them at runtime. HLSL variables aren't limited to matrices, and there are lots of things you can achieve with HLSL, but I'll leave that research up to you!

So now we've loaded the effect file, which defines the vertex and pixel shader stages of the pipeline; next, let's deal with the input assembler stage. We define a basic vertex struct as follows:

```cpp
struct vertex
{
    D3DXVECTOR3 pos;
    D3DXVECTOR4 color;

    vertex( D3DXVECTOR3 p, D3DXVECTOR4 c ) : pos(p), color(c) {}
};
```

Now we need to tell DX10, and more specifically the technique, how to handle this vertex type; this is done via an input layout. The first thing we do is fill out an array of input element descriptions, which defines the format of the vertex. The parameters are described in the SDK docs, but the important one is the aligned byte offset: you'll notice that for the second element the offset is 12, since the first element consists of 3 x 4-byte floats. In other words, the input layout describes how the memory each vertex occupies is split up into its individual variables.

```cpp
D3D10_INPUT_ELEMENT_DESC layout[] =
{
    { "POSITION", 0, DXGI_FORMAT_R32G32B32_FLOAT, 0, 0, D3D10_INPUT_PER_VERTEX_DATA, 0 },
    { "COLOR", 0, DXGI_FORMAT_R32G32B32A32_FLOAT, 0, 12, D3D10_INPUT_PER_VERTEX_DATA, 0 }
};
```
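The byte offsets in the layout can be double-checked with plain C++. This sketch uses hypothetical Float3/Float4 stand-ins for D3DXVECTOR3/D3DXVECTOR4 (assumed to be tightly packed floats, like the real types), drops the constructor, and lets the compiler compute the offsets and the size that later serves as the stride:

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-ins for D3DXVECTOR3 / D3DXVECTOR4.
struct Float3 { float x, y, z; };
struct Float4 { float x, y, z, w; };

// Mirrors the tutorial's vertex layout (constructor omitted).
struct vertex {
    Float3 pos;    // DXGI_FORMAT_R32G32B32_FLOAT    -> 3 x 4 bytes
    Float4 color;  // DXGI_FORMAT_R32G32B32A32_FLOAT -> 4 x 4 bytes
};

// These must match the AlignedByteOffset values in the input layout.
constexpr std::size_t kPosOffset   = offsetof(vertex, pos);
constexpr std::size_t kColorOffset = offsetof(vertex, color);

// Size of one vertex: the stride for IASetVertexBuffers and the unit for ByteWidth.
constexpr std::size_t kStride = sizeof(vertex);

static_assert(kPosOffset == 0, "position should come first");
static_assert(kColorOffset == 12, "color should start after 3 floats");
static_assert(kStride == 28, "no padding expected between the members");
```

With this layout, a buffer with room for 100 vertices needs 28 * 100 = 2800 bytes.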

The next step is getting the description of a pass and creating the input layout. The layout is validated against the input signature of a pass's vertex shader; since our technique only has one pass, we just take the first pass and use its signature to create the input layout. Once the layout is created we set it as the active input layout. This matters because you may need to process multiple types of vertices and swap input layouts as needed.

```cpp
UINT numElements = 2;
D3D10_PASS_DESC PassDesc;
pBasicTechnique->GetPassByIndex( 0 )->GetDesc( &PassDesc );

if ( FAILED( pD3DDevice->CreateInputLayout( layout, numElements, PassDesc.pIAInputSignature,
                                            PassDesc.IAInputSignatureSize, &pVertexLayout ) ) )
    return fatalError("Could not create Input Layout!");

// Set the input layout
pD3DDevice->IASetInputLayout( pVertexLayout );
```

Vertex Buffers

Phew, we're nearly done with the initialization. The last major thing we need to do is create the vertex buffer. The vertex buffer stores our vertices before they are pumped through to the GPU for processing. Think of it as the line for a carnival ride: people queue up until the ride is ready and then get on, and while the ride is busy more people queue up, and so on. The vertex buffer lives in memory managed by the driver for the GPU, so we can't access or modify it directly.

So let's create the vertex buffer. As expected, the first step is filling out a DESC structure. The important fields are Usage, which specifies how the buffer will be used (we want to update it at runtime, so we make it a dynamic buffer), and CPUAccessFlags, which specifies the type of access the CPU is granted to the buffer (again, since we want to update it at runtime, we allow the CPU to write to it).

Then we create the vertex buffer and set the Input Assembler to use it. The parameters and their descriptions are once again found in the SDK documentation.

```cpp
// create vertex buffer (space for 100 vertices)
//---------------------------------------------
UINT numVertices = 100;

D3D10_BUFFER_DESC bd;
bd.Usage = D3D10_USAGE_DYNAMIC;
bd.ByteWidth = sizeof( vertex ) * numVertices; // total size of buffer in bytes
bd.BindFlags = D3D10_BIND_VERTEX_BUFFER;
bd.CPUAccessFlags = D3D10_CPU_ACCESS_WRITE;
bd.MiscFlags = 0;

if ( FAILED( pD3DDevice->CreateBuffer( &bd, NULL, &pVertexBuffer ) ) )
    return fatalError("Could not create vertex buffer!");

// Set vertex buffer
UINT stride = sizeof( vertex );
UINT offset = 0;
pD3DDevice->IASetVertexBuffers( 0, 1, &pVertexBuffer, &stride, &offset );
```

Setting up the Rasterizer

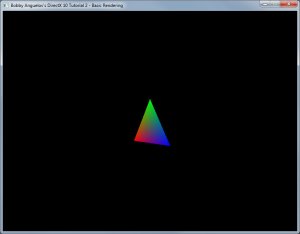

In my example I render a spinning triangle, but by default DX10 enables back-face culling, which results in my triangle being visible only half the time. I want to disable that, and since culling happens in the rasterizer stage, I need to create a custom rasterizer state and set the pipeline to use it:

```cpp
D3D10_RASTERIZER_DESC rasterizerState;
rasterizerState.CullMode = D3D10_CULL_NONE;
rasterizerState.FillMode = D3D10_FILL_SOLID;
rasterizerState.FrontCounterClockwise = true;
rasterizerState.DepthBias = 0; // an integer bias, not a boolean
rasterizerState.DepthBiasClamp = 0;
rasterizerState.SlopeScaledDepthBias = 0;
rasterizerState.DepthClipEnable = true;
rasterizerState.ScissorEnable = false;
rasterizerState.MultisampleEnable = false;
rasterizerState.AntialiasedLineEnable = true;

ID3D10RasterizerState* pRS;
if ( FAILED( pD3DDevice->CreateRasterizerState( &rasterizerState, &pRS ) ) )
    return fatalError("Could not create rasterizer state!");
pD3DDevice->RSSetState( pRS );
```
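To see why the triangle disappears half the time, this sketch tracks the winding of the tutorial's triangle as it spins. It simplifies things by projecting orthographically (no view or perspective matrix), and Point2, signedArea2 and triangleWindingAt are hypothetical helpers. In a y-up view, a negative signed area means the vertices appear clockwise, which is what D3D10 treats as front-facing by default (FrontCounterClockwise = FALSE with CullMode = D3D10_CULL_BACK):

```cpp
#include <cassert>
#include <cmath>

struct Point2 { float x, y; };

// Twice the signed area of a 2D triangle; the sign gives the winding
// (y pointing up: negative = clockwise, positive = counterclockwise).
float signedArea2(Point2 a, Point2 b, Point2 c) {
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

// Rotates the tutorial's triangle about the Y axis by `angle` radians and
// projects it orthographically onto the XY plane (z is simply dropped).
// Simplifying assumption: no view or perspective projection is applied.
float triangleWindingAt(float angle) {
    const float c = std::cos(angle);
    // All vertices have z = 0, so rotating about Y only scales x by cos(angle).
    Point2 v0{-1.0f * c, -1.0f};
    Point2 v1{ 0.0f * c,  1.0f};
    Point2 v2{ 1.0f * c, -1.0f};
    return signedArea2(v0, v1, v2);
}
```

The result works out to -4 * cos(angle), so the winding flips every half turn; with back-face culling enabled, that's exactly the half of the rotation where the triangle vanishes.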

NOTE: you may have noticed that all the D3D device functions are prefixed with the pipeline stage they affect (IA for the input assembler, RS for the rasterizer and so on); pretty nice of the developers to do that 😛

Okay wow, we've gone through a lot just to set up the pipeline, and we haven't even rendered anything yet. Jeez! I'm sure you OpenGL kids are screaming murder at this point, and I agree it's a bit more effort, but to be honest, after working in DX10 for the last couple of weeks I can't even imagine going back to OpenGL. While OpenGL might require less code for the basics, it's so much messier, and for more advanced operations the OpenGL code quickly spirals out of control.

Filling the Vertex Buffer

Now for the meat and potatoes of the whole tutorial: updating the vertex buffer. As I mentioned, we can't access the buffer's memory directly; to get at it, we need to map it. Mapping gives the CPU a pointer to a block of memory the size of the buffer that we can fill with our values, and when we unmap it, the driver makes sure the new data is what the GPU reads from the vertex buffer. (Exactly where that memory lives, and whether anything gets copied, is up to the driver.)

The Map function returns a pointer to the start of the mapped buffer, and we use pointer arithmetic (or array indexing) to move through it. I've used the "discard" flag (D3D10_MAP_WRITE_DISCARD), which means any previous contents of the buffer are thrown away: if the GPU is still busy using the buffer to feed vertices into the pipeline, Map returns a pointer to a new, empty block of memory, and the old block is released once the GPU has finished with it. There are various other flags that I'll probably cover in later tutorials, but for now let's leave it at that.

Using the returned pointer, we set three vertices and then unmap the buffer.

```cpp
// fill vertex buffer with vertices
UINT numVertices = 3;
vertex* v = NULL;

// map the vertex buffer for CPU writes (only touch it if Map succeeded)
if ( SUCCEEDED( pVertexBuffer->Map( D3D10_MAP_WRITE_DISCARD, 0, (void**) &v ) ) )
{
    v[0] = vertex( D3DXVECTOR3(-1,-1,0), D3DXVECTOR4(1,0,0,1) );
    v[1] = vertex( D3DXVECTOR3(0,1,0), D3DXVECTOR4(0,1,0,1) );
    v[2] = vertex( D3DXVECTOR3(1,-1,0), D3DXVECTOR4(0,0,1,1) );

    pVertexBuffer->Unmap();
}
```
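Because v is a vertex*, v[i] already steps sizeof(vertex) bytes per element. The sketch below spells the same pointer arithmetic out on raw bytes; a std::vector stands in for the mapped memory (an assumption for illustration, no D3D calls are made), and the vertex struct is a plain stand-in for the tutorial's:

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Stand-in for the tutorial's vertex: 3 floats of position, 4 floats of color.
struct vertex { float pos[3]; float color[4]; };

// Writes `count` vertices into a raw block such as the void* Map() hands back.
void writeVertices(void* mapped, const vertex* src, std::size_t count) {
    auto* bytes = static_cast<unsigned char*>(mapped);
    for (std::size_t i = 0; i < count; ++i) {
        // Vertex i starts i * stride bytes into the block.
        std::memcpy(bytes + i * sizeof(vertex), &src[i], sizeof(vertex));
    }
}
```

Casting the mapped pointer to vertex* and indexing it, as the tutorial does, is the same thing with the compiler doing the multiplication for you.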

Input Assembly

The next step is telling the input assembler what type of primitive we're drawing, using the set primitive topology function. A topology defines how the vertices in our buffer are connected: with a line list, a line is drawn for every two vertices in the buffer; with a triangle list, a triangle is drawn for every three vertices; with a triangle strip, every vertex after the first two adds another triangle, and so on. With only three vertices, the triangle strip used below and a triangle list produce the same single triangle. More detail on the various topologies can be found in the SDK docs.

```cpp
// Set primitive topology
pD3DDevice->IASetPrimitiveTopology( D3D10_PRIMITIVE_TOPOLOGY_TRIANGLESTRIP );
```
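As a quick sanity check on how topology and vertex count interact, this sketch counts the primitives the input assembler builds from n vertices for a few topologies. The Topology enum is a hypothetical mirror of some D3D10_PRIMITIVE_TOPOLOGY values; the adjacency topologies are left out:

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical mirror of a few D3D10_PRIMITIVE_TOPOLOGY values.
enum class Topology { PointList, LineList, LineStrip, TriangleList, TriangleStrip };

// Number of complete primitives built from `n` vertices.
std::size_t primitiveCount(Topology t, std::size_t n) {
    switch (t) {
        case Topology::PointList:     return n;
        case Topology::LineList:      return n / 2;              // one line per 2 vertices
        case Topology::LineStrip:     return n >= 2 ? n - 1 : 0; // each new vertex extends the strip
        case Topology::TriangleList:  return n / 3;              // one triangle per 3 vertices
        case Topology::TriangleStrip: return n >= 3 ? n - 2 : 0; // each new vertex adds a triangle
    }
    return 0;
}
```

For the tutorial's three vertices, both TriangleList and TriangleStrip give exactly one triangle; the difference only shows up once you add a fourth vertex.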

Meet the Matrices

Damn, I got sidetracked. On to the rendering! First, let's create a world matrix, which defines the position and orientation of the object in the world.

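```cpp
// create world matrix
static float r;                 // rotation angle in radians, kept between frames
D3DXMATRIX w;
D3DXMatrixIdentity( &w );       // not strictly needed: the next call overwrites w
D3DXMatrixRotationY( &w, r );   // rotate about the Y axis
r += 0.001f;                    // advance the angle by a fixed amount every frame
```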
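For reference, here's a plain C++ sketch of the matrix D3DXMatrixRotationY builds, laid out as documented for D3DX (left-handed, row vectors, row-major storage), plus a hypothetical advanceAngle helper. Because r += 0.001f adds a fixed amount per frame, the spin speed depends on the frame rate; scaling by elapsed time avoids that (the speed value you pick is up to you):

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<std::array<float, 4>, 4>;

// Rotation about Y in the D3DX layout:
//  [ cos  0  -sin  0 ]
//  [  0   1    0   0 ]
//  [ sin  0   cos  0 ]
//  [  0   0    0   1 ]
Mat4 rotationY(float angle) {
    const float c = std::cos(angle);
    const float s = std::sin(angle);
    return Mat4{{{c, 0.0f, -s, 0.0f},
                 {0.0f, 1.0f, 0.0f, 0.0f},
                 {s, 0.0f, c, 0.0f},
                 {0.0f, 0.0f, 0.0f, 1.0f}}};
}

// Frame-rate independent update: advance by speed * elapsed seconds and
// wrap into [0, 2*pi) for non-negative inputs.
float advanceAngle(float angle, float radiansPerSecond, float elapsedSeconds) {
    const float twoPi = 6.28318530718f;
    return std::fmod(angle + radiansPerSecond * elapsedSeconds, twoPi);
}
```

In the render loop this would replace r += 0.001f with something like r = advanceAngle( r, 1.0f, elapsedSeconds ), where elapsedSeconds is a hypothetical value coming from whatever frame timer you already have.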

Then we update the effect variables using the pointers we created earlier. NOTE: this does not actually update the values on the GPU; the values are stored on the CPU side until a pass's Apply() function is called.

```cpp
// set effect matrices
pWorldMatrixEffectVariable->SetMatrix( w );
pViewMatrixEffectVariable->SetMatrix( viewMatrix );
pProjectionMatrixEffectVariable->SetMatrix( projectionMatrix );
```
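To make the "set now, upload on Apply()" idea concrete, here's a toy model with made-up classes (ToyConstantBuffer, ToyEffectVariable and ToyPass are not D3D10 types). As far as I understand the effects framework, it keeps a CPU-side copy of each constant buffer and only pushes changed buffers to the GPU when a pass is applied; the model mimics that with a dirty flag:

```cpp
#include <cassert>

// Toy model only: a "constant buffer" with a CPU copy and a "GPU" copy.
struct ToyConstantBuffer {
    float cpuCopy = 0.0f;  // written by Set()
    float gpuCopy = 0.0f;  // what a draw call would actually read
    bool dirty = false;    // true when cpuCopy has changed since the last Apply()
};

// Setting a variable only records the value on the CPU side.
struct ToyEffectVariable {
    ToyConstantBuffer& cb;
    void Set(float v) { cb.cpuCopy = v; cb.dirty = true; }
};

// Applying the pass is what pushes changed values to the "GPU".
struct ToyPass {
    ToyConstantBuffer& cb;
    void Apply() {
        if (cb.dirty) { cb.gpuCopy = cb.cpuCopy; cb.dirty = false; }
    }
};
```

Drawing between Set() and Apply() in this model would read the stale gpuCopy, which is the same trap as forgetting to call Apply() before Draw().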

Drawing the scene (finally) 😉

A draw call reads from whatever vertex buffers are currently bound to the input assembler, so if you keep your objects in separate buffers, remember to bind the correct one before you make any draw calls. The fact that there is an offset (and that Draw takes a start vertex) hints that you can also store multiple objects in a single buffer and then draw them all using multiple consecutive draw calls.
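Here's a sketch of the bookkeeping for packing several objects back-to-back into one vertex buffer. DrawRange, packMeshes and fitsInBuffer are hypothetical helpers; each range holds the two values Draw() takes (vertex count and start vertex):

```cpp
#include <cassert>
#include <vector>

// The (VertexCount, StartVertexLocation) pair for one object's Draw() call.
struct DrawRange {
    unsigned vertexCount;
    unsigned startVertex;
};

// Lays meshes out back-to-back: each one starts where the previous one ended.
std::vector<DrawRange> packMeshes(const std::vector<unsigned>& meshVertexCounts) {
    std::vector<DrawRange> ranges;
    unsigned next = 0;
    for (unsigned count : meshVertexCounts) {
        ranges.push_back({count, next});
        next += count;
    }
    return ranges;
}

// True if every range fits inside a buffer created with room for `capacity` vertices.
bool fitsInBuffer(const std::vector<DrawRange>& ranges, unsigned capacity) {
    if (ranges.empty()) return true;
    const DrawRange& last = ranges.back();
    return last.startVertex + last.vertexCount <= capacity;
}
```

Each object would then be drawn with pD3DDevice->Draw( range.vertexCount, range.startVertex ), typically after setting that object's world matrix and calling Apply() on the pass, so every object can have its own transformation.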

Finally, using our technique pointer, we get its description and process all the passes present in the technique. For each pass we send the vertices in the vertex buffer through the pipeline using the Draw command, specifying the number of vertices to draw and the start index in the vertex buffer. Before we can draw anything, we need to call Apply() on the pass; this sends all the effect data we set earlier (matrices, etc.) to the GPU and binds the pass's shaders. If you set a matrix and don't call Apply() before drawing, the matrix will not be updated!

```cpp
// get technique desc
D3D10_TECHNIQUE_DESC techDesc;
pBasicTechnique->GetDesc( &techDesc );

pWorldMatrixEffectVariable->SetMatrix( w );
pViewMatrixEffectVariable->SetMatrix( viewMatrix );
pProjectionMatrixEffectVariable->SetMatrix( projectionMatrix );

for( UINT p = 0; p < techDesc.Passes; ++p )
{
    // apply the pass (uploads the effect variables and binds its shaders)
    pBasicTechnique->GetPassByIndex( p )->Apply( 0 );

    // draw
    pD3DDevice->Draw( numVertices, 0 );
}
```

So that's it, we've reached the end of the second tutorial. We've covered a lot of ground, and I apologize for not going into much depth regarding the functions and their parameters, but I don't have the time right now for in-depth tutorials, and I feel that a little research and self-study will be more beneficial than me spoon-feeding you.

I'm hoping these tutorials can serve as a reference for your own code, or give you ideas and insight for your own projects.

Source Code

The source code and VS2k8 project for the tutorial can be found here: GitHub

“DX10 can only have one active vertex buffer at a time so if you have multiple buffers remember to load the correct one before you do any draw calls”: we can load up to 16 input vertex buffers in slots, and the “IASetVertexBuffers” method takes an array of input buffers.

Yes, you are correct, but what I was trying to get at is that people often have multiple separate buffers that they swap and then draw. That array of buffers acts as a single large buffer that you can draw in a single step.

My wording hasn't made that point too clear. Also remember these are beginner tutorials, so I don't want to have to explain multiple buffers, each with varying stride lengths and so on…

Hey, any idea how to use the draw call in the case of multiple buffers (basically to draw from any of the vertex buffers loaded)?

I'm having severe issues getting this to work. I've known DirectX since before 8 and I've always had it up and running, but D3D10 is being a total pineapple!

I get blank screens and all sorts of problems when I modify my input element desc. It can't be my fault, as I'm quite experienced with all previous versions of Direct3D.

Do you know what could be my problem?

You shouldn't be modifying the input element desc. You only create it once and that's it; you then use that desc struct to create the input layout, which is extremely important for the pipeline.

Thanks for this tutorial, it helped a lot with (finally) picking up dx 10 and HLSL.

I managed to add a simple forwarding geometry shader too, in case someone is interested (changed names a bit):

```hlsl
[maxvertexcount(3)]
void TheGeometryShader( triangle PixelShaderInput input[3], inout TriangleStream<PixelShaderInput> triStream )
{
    triStream.Append( input[0] );
    triStream.Append( input[1] );
    triStream.Append( input[2] );
}
```

Hi Bobby,

I recently just stumbled onto this tutorial, and it was of great help to me with trying to figure out shaders. I have a question that I feel should be glaringly obvious, but I cannot figure it out: how can I do a vertices transform on several objects? Right now, I have two cubes and through the shader, I am able to transform their vertices and rotate them…but I can’t do it separately. I’d like one to rotate one way, and the other to rotate another way, yet I am unable to do that. Do you have any suggestions?

Thank you so much,

Xixia

You have left in some html